Agentic memory is all the rage right now, so I did a deep dive to understand the “high level” implementation. This post walks through a complete production-grade design—architecture and implementation.

At a “high level”: Agentic Memory turns a Large Language Model (LLM) from a stateless text generator into an adaptive software agent that learns, recalls, and reasons over a growing body of experience.

TL;DR

Agentic memory transforms LLMs into learning agents through:

- Vector-based memory storage with semantic search

- Behavioral rule learning from user interaction patterns

- Bidirectional memory linking for rich context graphs

- Multi-layered optimization for speed and relevance

- Automated maintenance for scalability and cost control

The result: AI agents that remember, learn, and provide increasingly personalized responses while keeping P95 response times under 4 seconds at production scale.

Production-Ready

Before diving into the architecture and implementation, let’s define what production-ready means for an agentic memory system.

A production deployment must reliably deliver sub-4-second responses at the 95th percentile, retrieve contextually relevant memories over 90% of the time, serve the majority of requests from in-memory caches to minimize expensive lookups, and maintain storage efficiency through compression and pruning.

These key performance indicators—latency, relevance, cache hit rate, and storage savings—ensure the system runs smoothly under real-world load and continuously adapts without ballooning costs.

1. High-Level Architecture

“Generated using 🍌 Nano Banana by Google”

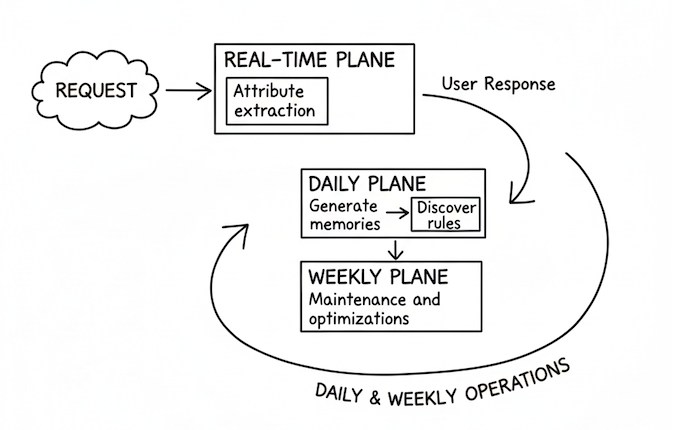

At a glance, the system has three execution domains:

| Plane | Responsibility | Latency Budget |
|---|---|---|
| Real-Time Plane | Attribute extraction → semantic search → prompt construction → LLM inference | ≤ 4 s |
| Daily Plane | Attribute generation for new memories, rule discovery, link creation | Background |
| Weekly Plane | Compression, pruning, index rebuild, consistency checks | Scheduled |

Daily Jobs Design Rationale:

Link new memories daily so they’re findable the next day. Update user rules daily so the agent adapts to changing preferences quickly.

Weekly Jobs Design Rationale:

Run compression, pruning, index rebuilds, and consistency checks weekly to balance storage efficiency with system stability. Daily operations would create too much overhead and risk downtime during peak hours; monthly operations would let storage costs and performance degradation accumulate unnecessarily.

The Vector DB is the central backbone. Memories, rules and behavioral patterns are stored as embedded vectors plus structured metadata, enabling hybrid (metadata + vector) retrieval for both speed and precision.

2. Real-Time Query Pipeline: A Complete Example

Let’s walk through a complete example from query to response. A user asks:

User Query: “I’m struggling with my React Native app performance. The list scrolling is very laggy with 1000+ items. I tried FlatList but it’s still slow. Any suggestions for optimization?”

Step 1: Attribute Extraction

An LLM call with structured output extracts a predefined set of attributes from the query:

```python
# Extract structured attributes from the query
attrs = {
    "category": "technical_support",
    "type": "performance_optimization",
    "keywords": ["React Native", "FlatList", "performance", "scrolling", "1000+ items"],
    "summary": "User experiencing React Native FlatList performance issues with large datasets",
    "intent": "seeking_technical_solution",
    "complexity": "intermediate",
}
```

Tip: Use YAML instead of JSON to save roughly 20-30% of tokens. For easier reading, this post uses JSON.
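One way to get exactly the predefined attributes back is OpenAI's structured-output mode, which constrains the reply to a JSON Schema. The sketch below only builds the request payload (no API call is made); the schema mirrors the attribute example above, while the helper name `build_attribute_request`, the system-prompt wording, and the `complexity` enum values are assumptions. In strict mode every property has to be listed in `required` and `additionalProperties` has to be `false`.

```python
import json

# Schema mirrors the attribute example above; the complexity enum values are an assumption.
ATTRIBUTE_SCHEMA = {
    "type": "object",
    "properties": {
        "category": {"type": "string"},
        "type": {"type": "string"},
        "keywords": {"type": "array", "items": {"type": "string"}},
        "summary": {"type": "string"},
        "intent": {"type": "string"},
        "complexity": {"type": "string", "enum": ["beginner", "intermediate", "advanced"]},
    },
    # Strict mode: every property is required and no extra keys are allowed.
    "required": ["category", "type", "keywords", "summary", "intent", "complexity"],
    "additionalProperties": False,
}


def build_attribute_request(user_query: str, model: str = "gpt-5") -> dict:
    """Build (but do not send) a Chat Completions payload with a strict JSON Schema."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "Extract the query attributes defined by the schema."},
            {"role": "user", "content": user_query},
        ],
        "response_format": {
            "type": "json_schema",
            "json_schema": {
                "name": "query_attributes",
                "strict": True,
                "schema": ATTRIBUTE_SCHEMA,
            },
        },
    }


payload = build_attribute_request(
    "The list scrolling is very laggy with 1000+ items. I tried FlatList but it's still slow."
)
print(json.dumps(payload["response_format"], indent=2))
```

The model's reply still arrives as a JSON string in the message content, so it needs a `json.loads` before the attributes can drive the search.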

Step 2: Vector Search for Memories

The system searches the vector database and retrieves the top-X most relevant memories:

Simple Version

```python
selected_memories = [
    {
        "id": 1847,
        "content": "User implemented VirtualizedList for 5000+ item dataset, achieved 60fps scrolling",
        "relevance": 0.94,
    },
    {
        "id": 2156,
        "content": "User works on React Native e-commerce app with product catalogs",
        "relevance": 0.91,
    },
]
```

Expanded Version

```python
selected_memories = [
    {
        "id": 1847,
        "content": "User implemented VirtualizedList for 5000+ item dataset, achieved 60fps scrolling",
        "category": "past_solution",
        "keywords": ["VirtualizedList", "5000+ items", "60fps", "performance"],
        "outcome": {"before_fps": 15, "after_fps": 60, "success": True},
        "relevance_score": 0.94,
    },
    {
        "id": 2156,
        "content": "User works on React Native e-commerce app with product catalogs",
        "category": "project_context",
        "keywords": ["React Native", "e-commerce", "product catalogs", "mobile app"],
        "outcome": {"scale": "10000+ products", "role": "lead_engineer", "status": "active"},
        "relevance_score": 0.91,
    },
]
```
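Under the hood, "top X" is a nearest-neighbour search over embeddings. The brute-force sketch below ranks memories by cosine similarity to the query embedding; the `Memory` dataclass, the toy 3-dimensional vectors, and the function names are illustrative assumptions, and a real vector DB would replace the linear scan with an approximate-nearest-neighbour index.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Memory:
    id: int
    content: str
    embedding: list[float]
    metadata: dict = field(default_factory=dict)


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k_memories(query_vec: list[float], memories: list[Memory], k: int = 5) -> list[dict]:
    """Brute-force top-k by cosine similarity (a vector DB would use an ANN index)."""
    scored = sorted(
        ((cosine(query_vec, m.embedding), m) for m in memories),
        key=lambda pair: pair[0],
        reverse=True,
    )
    return [{"id": m.id, "content": m.content, "relevance": round(score, 2)}
            for score, m in scored[:k]]


# Toy 3-dimensional embeddings; real ones come from an embedding model.
memories = [
    Memory(1847, "User implemented VirtualizedList for 5000+ item dataset", [0.9, 0.1, 0.0]),
    Memory(2156, "User works on React Native e-commerce app", [0.7, 0.3, 0.1]),
    Memory(3001, "User prefers dark-mode UI examples", [0.0, 0.1, 0.9]),
]
print(top_k_memories([1.0, 0.2, 0.0], memories, k=2))  # memories 1847 and 2156
```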

Step 3: Rule & Behavior Extraction

Based on user history, the system retrieves behavioral patterns using the same logic as for memories: search the vector DB and retrieve the top-X relevant rules and behaviors.

Simple Version

```python
selected_rules = [
    {
        "rule": "For technical questions, provide detailed code examples",
        "confidence": 0.92,
    },
    {
        "rule": "Include performance metrics for mobile development questions",
        "confidence": 0.88,
    },
]
```

Expanded Version

```python
selected_rules = [
    {
        "rule_id": 847,
        "condition": "topic == 'technical_implementation' AND complexity >= 'intermediate'",
        "action": "Provide detailed code examples with explanations",
        "confidence": 0.92,
        "success_rate": 0.89,
    },
    {
        "rule_id": 234,
        "condition": "domain == 'mobile_development' AND intent == 'performance_optimization'",
        "action": "Include specific metrics (FPS, memory usage, load times)",
        "confidence": 0.88,
        "success_rate": 0.84,
    },
]
```
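The expanded rules carry their condition as a string. If you want to enforce those conditions after retrieval, a small evaluator covers the `key == 'value'` and `AND` shapes shown here. This is a hypothetical sketch: the attribute keys (`topic`, `domain`, and so on) must match whatever your extraction step actually produces, and the ordinal scale used for `complexity >=` is an assumption.

```python
import re

# Assumed ordinal scale so that ">=" comparisons on complexity are meaningful.
COMPLEXITY_ORDER = {"beginner": 0, "intermediate": 1, "advanced": 2}

# One clause looks like: key == 'value'  or  key >= 'value'
CLAUSE = re.compile(r"^\s*(\w+)\s*(==|>=)\s*'([^']*)'\s*$")


def rule_matches(condition: str, attrs: dict) -> bool:
    """True if every AND-joined clause in the condition holds for the query attributes."""
    for clause in condition.split(" AND "):
        match = CLAUSE.match(clause)
        if match is None:
            raise ValueError(f"unsupported clause: {clause!r}")
        key, op, expected = match.groups()
        actual = attrs.get(key)
        if actual is None:
            return False
        if op == "==" and actual != expected:
            return False
        if op == ">=" and COMPLEXITY_ORDER.get(actual, -1) < COMPLEXITY_ORDER[expected]:
            return False
    return True


def select_rules(rules: list[dict], attrs: dict, min_confidence: float = 0.8) -> list[dict]:
    """Keep confident rules whose conditions hold, highest confidence first."""
    applicable = [
        r for r in rules
        if r["confidence"] >= min_confidence and rule_matches(r["condition"], attrs)
    ]
    return sorted(applicable, key=lambda r: r["confidence"], reverse=True)


rules = [
    {"rule_id": 847, "confidence": 0.92,
     "condition": "topic == 'technical_implementation' AND complexity >= 'intermediate'"},
    {"rule_id": 234, "confidence": 0.88,
     "condition": "domain == 'mobile_development' AND intent == 'performance_optimization'"},
]
attrs = {"topic": "technical_implementation", "complexity": "intermediate",
         "domain": "mobile_development", "intent": "performance_optimization"}
print([r["rule_id"] for r in select_rules(rules, attrs)])  # [847, 234]
```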

Step 4: Dynamic System Prompt Construction

For simplicity, let’s assume we are building a coding agent, hence the following system prompt. The request uses the Chat Completions message format with the GPT-5 model.

```json
{
  "model": "gpt-5",
  "messages": [
    {
      "role": "system",
      "content": "You are an expert software engineer assistant. Always provide detailed code examples with explanations, include performance metrics and testing approaches, and show before/after comparisons for optimizations."
    },
    {
      "role": "assistant",
      "name": "memory_injector",
      "content": {
        "relevant_memories": [
          {"id": 1847, "summary": "Implemented VirtualizedList for 5000+ items, achieved 60fps"},
          {"id": 2156, "summary": "React Native e-commerce app with product catalogs"},
          {"id": 892, "summary": "Used getItemLayout and keyExtractor previously"}
        ],
        "user_rules": [
          {"rule_id": 847, "action": "Provide detailed code examples with explanations"},
          {"rule_id": 234, "action": "Include specific metrics (FPS, memory usage, load times)"}
        ],
        "current_context": "User experiencing React Native FlatList performance issues with 1000+ items"
      }
    },
    {
      "role": "user",
      "content": "Any suggestions to optimize my FlatList with 1000+ items for smoother scrolling?"
    }
  ]
}
```

Why Put Memories and Rules in Assistant Messages

Memories and user rules show the assistant’s current knowledge and user preferences, not fixed instructions. Putting them in an assistant message called “memory_injector” makes them part of the conversation context the model can refer to easily. This way, the system message stays focused on general instructions, while the assistant message handles personalized details.

Static Rules vs. Dynamic Rules

Static rules are fixed guidelines that always apply, like “never share private info” or “be polite.” They are the same for every user and session and go in the system message. Dynamic rules change based on the user’s behavior or needs, like “always show code examples for this user” or “focus on performance stats.” These rules update often and go in the assistant message to customize the responses.
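The Step 4 example shows the memory payload as a raw JSON object, but the Chat Completions API expects message `content` to be a string (or a list of content parts), so in practice the payload is serialized first. The sketch below assembles the request with static rules in the system message and memories plus dynamic rules in the `memory_injector` assistant message; `build_messages` and the static-rule list are illustrative assumptions.

```python
import json

# Static rules apply to every user and session, so they live in the system message.
STATIC_RULES = ["Never share private info.", "Be polite."]


def build_messages(user_query: str, memories: list[dict], dynamic_rules: list[dict],
                   current_context: str, model: str = "gpt-5") -> dict:
    """Assemble a Chat Completions request: static rules in the system message,
    memories and per-user (dynamic) rules in the memory_injector assistant message."""
    system_prompt = "You are an expert software engineer assistant. " + " ".join(STATIC_RULES)
    injected = {
        "relevant_memories": [{"id": m["id"], "summary": m["summary"]} for m in memories],
        "user_rules": [{"rule_id": r["rule_id"], "action": r["action"]} for r in dynamic_rules],
        "current_context": current_context,
    }
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            # Message content must be text, so the structured payload is serialized to JSON.
            {"role": "assistant", "name": "memory_injector", "content": json.dumps(injected)},
            {"role": "user", "content": user_query},
        ],
    }


request = build_messages(
    "Any suggestions to optimize my FlatList with 1000+ items for smoother scrolling?",
    memories=[{"id": 1847, "summary": "Implemented VirtualizedList for 5000+ items, achieved 60fps"}],
    dynamic_rules=[{"rule_id": 847, "action": "Provide detailed code examples with explanations"}],
    current_context="User experiencing React Native FlatList performance issues with 1000+ items",
)
```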

Step 5: LLM Response Generation

The enriched prompt generates a contextually aware response:

Based on your previous success with VirtualizedList for large datasets and your e-commerce app context, here are specific optimizations for your 1000+ item FlatList:

Since you've already used getItemLayout and keyExtractor before, let's build on that with these advanced techniques:

```jsx
<FlatList
  data={products}
  windowSize={10}
  initialNumToRender={20}
  getItemLayout={(data, index) => ({
    length: ITEM_HEIGHT,
    offset: ITEM_HEIGHT * index,
    index,
  })}
  keyExtractor={(item) => item.id}
  removeClippedSubviews={true}
/>
```

For your e-commerce product catalog, consider VirtualizedList (which gave you 60fps before) or FlashList for even better performance.

Performance Testing: Before: ~30fps → After: 55-60fps with optimizations

Post Query

Step 6: Memory Generation & Storage

After the response, the system constructs a memory creation prompt by supplying the LLM with:

- The final user query

- The generated answer

- The selected memories

- The active user rules

- The recent conversation history

It then asks the LLM to output a JSON object representing the new memory.

GPT-5 request (Chat Completions message format):

```json
{
  "model": "gpt-5",
  "messages": [
    {
      "role": "system",
      "content": "You are an AI assistant that records concise, accurate memories of each interaction. Use the provided schema to output a JSON object with fields: id (string), content (string), category (string), keywords (array of strings), related_memories (array of strings), timestamp (ISO 8601)."
    },
    {
      "role": "assistant",
      "name": "memory_injector",
      "content": {
        "user_query": "I'm struggling with my React Native app performance. The list scrolling is very laggy with 1000+ items. I tried FlatList but it's still slow. Any suggestions for optimization?",
        "assistant_response": "Based on your previous success with VirtualizedList … Performance Testing: Before: ~30fps → After: 55-60fps with optimizations",
        "selected_memories": [
          {"id": 1847, "summary": "Implemented VirtualizedList for 5000+ items, achieved 60fps"},
          {"id": 2156, "summary": "React Native e-commerce app with product catalogs"},
          {"id": 892, "summary": "Used getItemLayout and keyExtractor previously"}
        ],
        "applicable_rules": [
          {"rule_id": 847, "action": "Provide detailed code examples"},
          {"rule_id": 234, "action": "Include specific metrics"}
        ],
        "conversation_history": [
          {"role": "user", "content": "Have you tested FlashList?"},
          {"role": "assistant", "content": "FlashList can achieve 70–75fps under similar conditions."},
          {"role": "user", "content": "Great, thanks. How do I integrate it?"}
        ]
      }
    },
    {
      "role": "user",
      "content": "Generate a new memory JSON now."
    }
  ]
}
```

LLM Output (Simple Memory):

```json
{
  "id": 2847,
  "content": "Provided React Native FlatList optimization techniques for 1000+ items",
  "category": "technical_support_provided",
  "keywords": ["React Native", "FlatList", "windowSize", "performance"],
  "related_memories": [1847, 2156, 892]
}
```

Some thoughts about memory generation

To maintain accurate, relevant memories and prevent the LLM from inventing information, we employ multiple safeguards:

1. Constrain with Schema

Requiring the LLM to output a strict JSON schema ensures only the defined fields—id, content, category, keywords, related_memories, and timestamp—are emitted. This rigid structure prevents free-form responses and limits the model’s ability to hallucinate extraneous data.
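Even with the schema in the prompt, it is worth validating the output before it reaches the vector DB. A minimal standard-library sketch, assuming the field list and types from the memory-creation system prompt above (string ids, arrays of strings, an ISO 8601 timestamp); `validate_memory` is a hypothetical helper and the timestamp in the usage example is illustrative.

```python
import json
from datetime import datetime

# Field names and types follow the memory-creation system prompt.
MEMORY_FIELDS = {
    "id": str,
    "content": str,
    "category": str,
    "keywords": list,
    "related_memories": list,
    "timestamp": str,
}


def validate_memory(raw: str) -> dict:
    """Parse the LLM output and reject anything outside the memory schema."""
    memory = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(memory, dict):
        raise ValueError("memory must be a JSON object")
    missing = MEMORY_FIELDS.keys() - memory.keys()
    extra = memory.keys() - MEMORY_FIELDS.keys()
    if missing or extra:
        raise ValueError(f"missing={sorted(missing)} extra={sorted(extra)}")
    for name, expected in MEMORY_FIELDS.items():
        if not isinstance(memory[name], expected):
            raise ValueError(f"{name} must be {expected.__name__}")
    for name in ("keywords", "related_memories"):
        if not all(isinstance(item, str) for item in memory[name]):
            raise ValueError(f"{name} must contain only strings")
    # Raises ValueError for non-ISO timestamps (before Python 3.11, a trailing "Z" is rejected).
    datetime.fromisoformat(memory["timestamp"])
    return memory


raw = json.dumps({
    "id": "2847",
    "content": "Provided React Native FlatList optimization techniques for 1000+ items",
    "category": "technical_support_provided",
    "keywords": ["React Native", "FlatList", "windowSize", "performance"],
    "related_memories": ["1847", "2156", "892"],
    "timestamp": "2025-09-20T14:03:00+00:00",  # illustrative value
})
memory = validate_memory(raw)
```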

2. Leverage User Feedback

We monitor user actions (such as liking a response, copying text snippets, or sending “this worked” confirmations) and use these signals to boost the relevance score of the corresponding memories, or to test changes to the memory generation process. Another option is to weight memories with positive feedback higher and let unused or negatively rated entries decay.

3. Use a Judge Model

After each conversation, a separate evaluation LLM reviews the proposed memory against the full transcript. It scores factuality and contextual relevance, flagging or rejecting entries that don’t align. By isolating this judge on a different model checkpoint, we ensure independent verification rather than reinforcing the primary LLM’s biases.
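A minimal sketch of the judge step, assuming the judge is asked to return factuality and relevance scores between 0 and 1 as JSON. The prompt wording, the thresholds (0.8 and 0.7), and the helper names are hypothetical, and the call to the judge model itself is left to your client.

```python
import json
from dataclasses import dataclass

JUDGE_PROMPT = (
    "You review a proposed memory against the full conversation transcript. "
    'Reply with JSON only: {"factuality": <0-1>, "relevance": <0-1>, "reason": "<short>"}'
)


@dataclass
class Verdict:
    accepted: bool
    factuality: float
    relevance: float
    reason: str


def build_judge_messages(transcript: str, proposed_memory: dict) -> list[dict]:
    """Messages for the separate judge model; sending them is not shown."""
    evidence = {"transcript": transcript, "proposed_memory": proposed_memory}
    return [
        {"role": "system", "content": JUDGE_PROMPT},
        {"role": "user", "content": json.dumps(evidence)},
    ]


def parse_verdict(raw: str, min_factuality: float = 0.8, min_relevance: float = 0.7) -> Verdict:
    """Accept the memory only if both scores clear their (hypothetical) thresholds."""
    data = json.loads(raw)
    factuality, relevance = float(data["factuality"]), float(data["relevance"])
    accepted = factuality >= min_factuality and relevance >= min_relevance
    return Verdict(accepted, factuality, relevance, data.get("reason", ""))


verdict = parse_verdict('{"factuality": 0.95, "relevance": 0.9, "reason": "matches transcript"}')
print(verdict.accepted)  # True
```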

4. Avoid Model Collapse

Since we cannot retrain OpenAI’s models directly, we prevent convergence by alternating judge prompts and rotating between multiple available model versions (e.g., GPT-4 and GPT-5). We also audit judge outputs against raw conversation logs and conduct random human spot checks. This multi-model, multi-audit approach ensures our memory system remains accurate and trustworthy.
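Rotation and spot checks are easier to audit when they are deterministic. The sketch below assigns each memory to a (model, prompt) pair via a stable hash and samples a small fraction for human review; the pool contents, the 2% rate, and the function names are assumptions.

```python
import hashlib
import random

# Hypothetical judge pool of (model, prompt variant) pairs to rotate through.
JUDGE_POOL = [
    ("gpt-5", "judge_prompt_v1"),
    ("gpt-5", "judge_prompt_v2"),
    ("gpt-4", "judge_prompt_v1"),
    ("gpt-4", "judge_prompt_v2"),
]


def pick_judge(memory_id: str) -> tuple[str, str]:
    """Stable assignment: the same memory always goes to the same (model, prompt) pair."""
    digest = hashlib.sha256(memory_id.encode("utf-8")).digest()
    return JUDGE_POOL[int.from_bytes(digest[:4], "big") % len(JUDGE_POOL)]


def sample_for_human_review(memory_ids: list[str], rate: float = 0.02, seed: int = 0) -> list[str]:
    """Randomly pick roughly `rate` of the judged memories for manual spot checks."""
    rng = random.Random(seed)
    return [mid for mid in memory_ids if rng.random() < rate]


print(pick_judge("2847"))
```

A SHA-256 digest is used instead of Python's built-in `hash()` because string hashing is randomized per process, which would send the same memory to different judges across runs.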

3. Optimization Techniques

A list of optimization techniques you can pick and choose from.

This section makes many assumptions about retrieval times from storage, cache, the vector DB, etc. Every system is different, so test the performance of your architecture both WITH and WITHOUT each optimization. The numbers stated here are broad estimates.

Predictive Memory

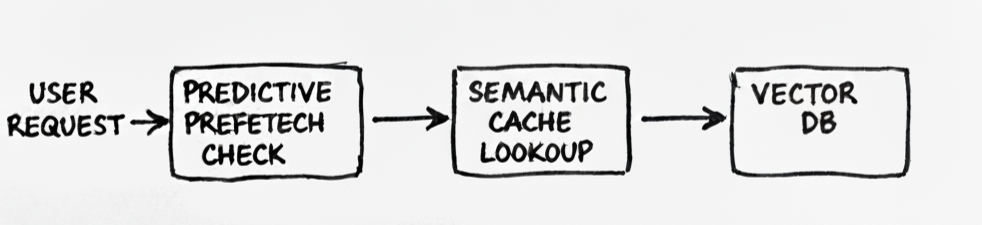

The core idea is to predict which memories and rules/user behaviors the next user query will need. Instead of retrieving them after the user has sent their query, we prefetch them to cut response time.

Let’s assume we use a Redis instance to store the prefetched data. Whenever a user sends a query, we run attribute extraction to get the query's category and look up potential matches for that category in Redis. If we find none, or fewer than we need, we continue and query the vector DB.
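A sketch of the prefetch path, with a plain dictionary standing in for Redis (a real deployment would store serialized JSON per user and category, with an expiry). The next-category predictor is deliberately naive: it assumes the user's most frequent recent categories come next. `search_fn` is a hypothetical stand-in for the vector DB query.

```python
from collections import Counter
from typing import Callable

SearchFn = Callable[[str, str, int], list]  # (user_id, category, k) -> memories


class PrefetchStore:
    """Dict-backed stand-in for the Redis prefetch store, keyed by (user_id, category)."""

    def __init__(self) -> None:
        self._data: dict[tuple[str, str], list[dict]] = {}

    def put(self, user_id: str, category: str, memories: list[dict]) -> None:
        self._data[(user_id, category)] = memories

    def get(self, user_id: str, category: str) -> list[dict]:
        return self._data.get((user_id, category), [])


def predict_next_categories(recent_categories: list[str], top_n: int = 2) -> list[str]:
    """Naive predictor: the user's most frequent recent categories come next."""
    return [category for category, _ in Counter(recent_categories).most_common(top_n)]


def prefetch(store: PrefetchStore, user_id: str, recent_categories: list[str],
             search_fn: SearchFn, k: int = 5) -> None:
    """Runs between queries: cache the top-k memories for each predicted category."""
    for category in predict_next_categories(recent_categories):
        store.put(user_id, category, search_fn(user_id, category, k))


def lookup(store: PrefetchStore, user_id: str, category: str,
           search_fn: SearchFn, k: int = 5) -> list[dict]:
    """At query time: serve prefetched memories, topping up from the vector DB if short."""
    hits = store.get(user_id, category)[:k]
    if len(hits) < k:
        seen = {m["id"] for m in hits}
        extra = [m for m in search_fn(user_id, category, k) if m["id"] not in seen]
        hits = hits + extra[: k - len(hits)]
    return hits


# Usage with a fake vector search standing in for the real DB client.
def fake_search(user_id: str, category: str, k: int) -> list[dict]:
    return [{"id": f"{category}-{i}"} for i in range(k)]


store = PrefetchStore()
prefetch(store, "u1", ["technical_support", "technical_support", "billing"], fake_search, k=3)
print(lookup(store, "u1", "technical_support", fake_search, k=3))  # served from the prefetch store
```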

Semantic Caching

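Semantic caching stores recently retrieved memory results in a fast in-memory layer (e.g., Redis), keyed by the query's embedding (or a locality-sensitive hash of it, so that nearby embeddings share a key) rather than its exact text. When a new query's embedding is close enough to a cached one, the cached results return instantly, so most “similar” queries never reach the vector DB.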

This semantic cache layer can reduce vector DB calls by up to 90%, cut average retrieval latency from ~200ms to ~20ms, and dramatically lower operational costs.
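A minimal semantic-cache sketch: cached entries are (query embedding, results) pairs, and a lookup returns the results of the most similar cached query only if its cosine similarity clears a threshold. The 0.95 threshold, FIFO eviction, and linear scan are assumptions for illustration; at scale the cached embeddings would themselves be indexed.

```python
import math
from typing import Optional


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Return cached results when a new query embedding is close to a cached one."""

    def __init__(self, threshold: float = 0.95, max_entries: int = 1000) -> None:
        self.threshold = threshold
        self.max_entries = max_entries
        self._entries: list[tuple[list[float], list[dict]]] = []

    def get(self, query_vec: list[float]) -> Optional[list[dict]]:
        """Linear scan for the most similar cached query; None on a miss."""
        best_score, best_results = -1.0, None
        for cached_vec, results in self._entries:
            score = cosine(query_vec, cached_vec)
            if score > best_score:
                best_score, best_results = score, results
        return best_results if best_score >= self.threshold else None

    def put(self, query_vec: list[float], results: list[dict]) -> None:
        if len(self._entries) >= self.max_entries:
            self._entries.pop(0)  # evict the oldest entry (FIFO)
        self._entries.append((query_vec, results))


cache = SemanticCache(threshold=0.95)
cache.put([1.0, 0.0], [{"id": 1847}])
print(cache.get([0.99, 0.05]))  # [{'id': 1847}]
print(cache.get([0.0, 1.0]))    # None
```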

“Generated using 🍌 Nano Banana by Google”

If you combine semantic caching with predictive memory, the hierarchical memory retrieval flow looks like this:

- Predictive Prefetch Check

- Semantic Cache Lookup

- Vector Database Fallback
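The three-tier flow can be expressed as a function that stops at the first tier with results and warms the cache after a vector-DB fallback. The tier callables are hypothetical placeholders for your prefetch store, semantic cache, and vector DB client; in the usage below an exact-match dict stands in for the semantic cache. Returning the tier name makes it easy to measure the cache hit rate.

```python
from collections import Counter
from typing import Any, Callable, Optional

Lookup = Callable[[Any], Optional[list]]


def hierarchical_retrieve(query: Any, prefetch_lookup: Lookup, cache_lookup: Lookup,
                          vector_search: Callable[[Any], list],
                          cache_store: Callable[[Any, list], None]) -> tuple[str, list]:
    """Return (tier_name, memories) from the first tier that has results."""
    hits = prefetch_lookup(query)      # 1. predictive prefetch check
    if hits:
        return "prefetch", hits
    hits = cache_lookup(query)         # 2. semantic cache lookup
    if hits:
        return "semantic_cache", hits
    hits = vector_search(query)        # 3. vector database fallback
    cache_store(query, hits)           # warm the cache for similar future queries
    return "vector_db", hits


# Stand-ins: an empty prefetch tier, an exact-match dict as the cache, a fixed DB result.
cache: dict = {}
served = Counter()
for query in ["flatlist lag", "flatlist lag", "billing question"]:
    tier, _ = hierarchical_retrieve(
        query,
        prefetch_lookup=lambda q: [],
        cache_lookup=cache.get,
        vector_search=lambda q: [{"id": 1847}],
        cache_store=cache.__setitem__,
    )
    served[tier] += 1
print(served)  # Counter({'vector_db': 2, 'semantic_cache': 1})
```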

Metadata Filtering

Metadata filtering narrows down the search space before performing vector similarity, reducing compute and memory overhead. The idea is to search through as few memories as possible rather than 100% of them. Assuming you have selected the “category” attribute as the filtering criterion, the logic can look like this:

Define Filter Criteria

Use structured metadata fields stored alongside embeddings, such as:

- user_id

- category (e.g. “technical_support”)

- timestamp (e.g. “last_6_months”)

Apply Pre-Query Filter

Construct a lightweight metadata query to limit candidates:

```python
filter = {
    "must": [
        {"key": "user_id", "match": {"value": uid}},
        {"key": "category", "match": {"value": attrs["category"]}},
        {"key": "timestamp", "range": {"gte": "2025-03-20"}},
    ]
}
```
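The filter syntax above resembles Qdrant-style payload filters. To show what it buys you, the sketch below applies the same three conditions in plain Python before scoring, so cosine similarity is computed only for the surviving candidates. The record layout, the `filtered_search` helper, and the toy data are assumptions.

```python
import math
from datetime import date


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def passes_filter(meta: dict, user_id: str, category: str, since: date) -> bool:
    """Same three conditions as the filter: user_id, category, timestamp >= since."""
    timestamp = meta.get("timestamp")
    return (
        meta.get("user_id") == user_id
        and meta.get("category") == category
        and timestamp is not None
        and date.fromisoformat(timestamp) >= since
    )


def filtered_search(query_vec, records, user_id, category, since, k=5):
    """Pre-filter on metadata, then rank only the survivors by cosine similarity."""
    candidates = [r for r in records if passes_filter(r["metadata"], user_id, category, since)]
    candidates.sort(key=lambda r: cosine(query_vec, r["embedding"]), reverse=True)
    return [r["id"] for r in candidates[:k]], len(candidates)


def record(rid, vec, user, cat, ts):
    return {"id": rid, "embedding": vec,
            "metadata": {"user_id": user, "category": cat, "timestamp": ts}}


records = [
    record(1, [1.0, 0.0], "u1", "technical_support", "2025-05-01"),
    record(2, [0.0, 1.0], "u1", "technical_support", "2025-06-01"),
    record(3, [1.0, 0.0], "u2", "technical_support", "2025-05-01"),  # other user
    record(4, [1.0, 0.0], "u1", "billing", "2025-05-01"),            # other category
    record(5, [1.0, 0.0], "u1", "technical_support", "2024-01-01"),  # too old
]
ids, scanned = filtered_search([1.0, 0.1], records, "u1", "technical_support", date(2025, 3, 20))
print(ids, scanned)  # [1, 2] 2
```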

Weighted Insertion

Weighted insertion gives each memory a dynamic importance score so that recent and high-impact entries are favored at retrieval time. Here’s how it works in simple terms:

Logic

New memories start with a base importance. Over time, their importance slowly decays. When a memory solves a user problem or receives positive feedback (e.g., user “liked” the response), we boost its importance.

Storage & Updates

We store the current importance score alongside each memory in the vector database. Importance is recalculated whenever a memory is accessed, when the user provides feedback, or during the nightly maintenance job.

Retrieval

At query time, the system ranks memories by combining their similarity to the query with their stored importance score. This ensures fresh, successful memories rise to the top while older or less useful ones naturally sink down the list.

Assumptions:

- Importance decay and feedback boosts run in the nightly batch to avoid real-time overhead.

- Memory accesses during peak hours may trigger on-the-fly recalculation for very high-value entries.

- Feedback events (likes, confirmations) immediately flag a memory for reweighting in the next batch.
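The post does not pin down a decay function, so the sketch below assumes exponential half-life decay plus fixed per-signal feedback adjustments, and combines importance with similarity as a simple product (one reading of the “Recency × relevance” row in the table that follows). All constants and names are hypothetical.

```python
from dataclasses import dataclass

HALF_LIFE_DAYS = 30.0  # hypothetical: importance halves every 30 days
BOOST = 0.25           # hypothetical boost per positive signal (like, "this worked")
PENALTY = 0.25         # hypothetical penalty per negative rating


@dataclass
class MemoryWeight:
    base: float = 1.0          # importance a new memory starts with
    age_days: float = 0.0      # days since creation
    positive_feedback: int = 0
    negative_feedback: int = 0


def importance(w: MemoryWeight) -> float:
    """Exponential half-life decay plus feedback adjustments, floored at zero."""
    decayed = w.base * 0.5 ** (w.age_days / HALF_LIFE_DAYS)
    adjusted = decayed + BOOST * w.positive_feedback - PENALTY * w.negative_feedback
    return max(adjusted, 0.0)


def rank(candidates: list[tuple[str, float, MemoryWeight]]) -> list[tuple[str, float]]:
    """Final score = similarity x importance, highest first."""
    scored = [(mem_id, similarity * importance(w)) for mem_id, similarity, w in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


ranking = rank([
    ("fresh", 0.80, MemoryWeight()),
    ("stale", 0.90, MemoryWeight(age_days=60)),
    ("stale_but_liked", 0.85, MemoryWeight(age_days=60, positive_feedback=3)),
])
print([mem_id for mem_id, _ in ranking])  # ['stale_but_liked', 'fresh', 'stale']
```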

| Technique | Before | After | Performance Gain (estimated) |
|---|---|---|---|
| Predictive Memory | Cold search every query | Pre-loaded context | 17% lower latency |
| Semantic Cache | All queries hit DB | 89% cache hit rate | 90% faster retrieval |
| Metadata Filtering | 47k vector search | 1.2k pre-filtered | 72% faster search |
| Weighted Insertion | Equal memory weights | Recency × relevance | 38% better relevance |

4. Long-Term Maintenance Workflows

Daily Jobs: Memory Linking & Rule Discovery

Memory Linking:

Each night, the system compares embeddings of newly created memories against the existing memory graph. It establishes semantic links when similarity exceeds a threshold, temporal links for entries within the same session window, and thematic links for shared keywords. These bidirectional connections enrich retrieval paths, enabling multi-hop context exploration.

Rule Extraction:

The system processes all user queries and assistant responses from the day through a pattern-detection pipeline. It identifies recurring behaviors (such as requests for code samples or performance data) and generates or updates user rules stored with fields for condition, action, confidence score, and timestamp. This ensures future responses automatically reflect observed user preferences.

Batch Upsert:

After linking and rule extraction, the updated memory links and new or modified rules are written back to the vector database and rule store in bulk. This scheduled batch operation maintains graph integrity, refreshes cached indices, and keeps the agent’s knowledge and behavior models in sync with the latest user interactions.
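The memory-linking step described above can be sketched as a pass over each new memory that adds semantic, temporal, and thematic edges to both endpoints. The similarity threshold (0.85), the one-hour session window, and the `MemoryNode` layout are assumptions; a real job would ask the vector DB for nearest neighbours instead of comparing against every existing memory.

```python
import math
from dataclasses import dataclass, field
from datetime import datetime, timedelta

SIMILARITY_THRESHOLD = 0.85          # hypothetical semantic-link threshold
SESSION_WINDOW = timedelta(hours=1)  # hypothetical temporal window within a session


@dataclass
class MemoryNode:
    id: str
    embedding: list[float]
    keywords: set[str]
    session_id: str
    created_at: datetime
    links: dict[str, set[str]] = field(default_factory=dict)  # neighbour id -> link kinds


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def add_link(a: MemoryNode, b: MemoryNode, kind: str) -> None:
    """Bidirectional: the edge is recorded on both memories."""
    a.links.setdefault(b.id, set()).add(kind)
    b.links.setdefault(a.id, set()).add(kind)


def link_new_memories(new: list[MemoryNode], existing: list[MemoryNode]) -> None:
    """Nightly pass: compare each new memory with every other memory."""
    for node in new:
        others = existing + [m for m in new if m.id != node.id]
        for other in others:
            if cosine(node.embedding, other.embedding) >= SIMILARITY_THRESHOLD:
                add_link(node, other, "semantic")
            if (node.session_id == other.session_id
                    and abs(node.created_at - other.created_at) <= SESSION_WINDOW):
                add_link(node, other, "temporal")
            if node.keywords & other.keywords:
                add_link(node, other, "thematic")


t0 = datetime(2025, 9, 20, 12, 0)
old = MemoryNode("1847", [1.0, 0.0], {"VirtualizedList", "performance"}, "s1", t0 - timedelta(days=30))
new = MemoryNode("2847", [0.95, 0.1], {"FlatList", "performance"}, "s9", t0)
other = MemoryNode("3000", [0.0, 1.0], {"billing"}, "s9", t0 + timedelta(minutes=5))
link_new_memories([new], [old, other])
print(new.links)
```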

Weekly Jobs: Compression & Pruning

0–7 Days (FP32)

Fresh memories remain in full precision to preserve maximum detail and retrieval accuracy.

No compression costs are incurred, ensuring immediate recall quality.

7–30 Days (FP16)

Memories older than a week are converted to half precision, halving storage with minimal accuracy loss.

This reduces memory footprint by ~50% while maintaining ~99% semantic fidelity.

30–90 Days (INT8)

Medium-aged memories undergo 8-bit quantization, cutting vector size by 75%.

Retrieval accuracy remains above 97% for most similarity searches.

90+ Days (DMC 75%)

Long-term memories are compressed by 75% through dynamic memory compression (DMC), using algorithms like PCA or autoencoders.

This aggressive reduction frees up storage and speeds up index scans with ~94% overall recall accuracy.
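A sketch of the age-based tiering and of what the FP16 and INT8 steps do to a single vector, using only the standard library. The symmetric per-vector INT8 scheme is one common choice rather than a method the post specifies, and the 90+ day DMC step is left as a label because PCA or an autoencoder needs a model fitted on your data.

```python
import struct


def tier_for_age(age_days: int) -> str:
    """Map a memory's age to the storage tiers described above."""
    if age_days < 7:
        return "fp32"
    if age_days < 30:
        return "fp16"
    if age_days < 90:
        return "int8"
    return "dmc"  # PCA / autoencoder compression; needs a fitted model, not shown


def to_fp16_bytes(vec: list[float]) -> bytes:
    """Half precision via struct's 'e' format: 2 bytes per dimension."""
    return struct.pack(f"<{len(vec)}e", *vec)


def quantize_int8(vec: list[float]) -> tuple[bytes, float]:
    """Symmetric per-vector INT8: 1 byte per dimension plus one float scale."""
    scale = max(abs(x) for x in vec) / 127 or 1.0  # avoid dividing by zero for all-zero vectors
    codes = [max(-127, min(127, round(x / scale))) for x in vec]
    return struct.pack(f"<{len(vec)}b", *codes), scale


def dequantize_int8(data: bytes, scale: float) -> list[float]:
    return [code * scale for code in struct.unpack(f"<{len(data)}b", data)]


vec = [0.12, -0.5, 0.33, 0.9]
fp32 = struct.pack(f"<{len(vec)}f", *vec)
int8, scale = quantize_int8(vec)
print(len(fp32), len(to_fp16_bytes(vec)), len(int8))  # 16 8 4
```

The byte counts line up with the savings quoted above: FP16 uses 2 bytes per dimension and INT8 uses 1 byte (plus one scale value per vector), versus 4 bytes for FP32.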

5. Production Metrics & Observability

Query Latency (P95: 3.2s)

Measures the 95th percentile of end-to-end response time: 95% of responses return within 3.2 seconds, comfortably under the 4-second target, keeping the user experience responsive.

Memory Relevance (91.7%)

Percentage of queries for which the top-10 retrieved memories are judged relevant; a value above 90% indicates the system consistently selects contextually appropriate information.

Cache Hit Rate (89%)

Proportion of memory retrievals served from in-memory caches (predictive or semantic) rather than the vector database; an 89% hit rate significantly reduces expensive DB queries.

Storage Efficiency (67% Reduction)

Aggregate storage savings achieved by compression and pruning; a 67% reduction means less disk usage and lower storage costs while keeping recall accuracy high.
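These KPIs can be computed from per-request logs. The sketch below assumes a hypothetical `RequestLog` record, uses the nearest-rank definition of P95, and checks the results against the production-ready targets defined at the start of the post (P95 under 4 s, relevance over 90%, the majority of requests served from caches).

```python
from dataclasses import dataclass


@dataclass
class RequestLog:
    latency_s: float      # end-to-end response time in seconds
    served_from: str      # "prefetch", "semantic_cache" or "vector_db"
    relevant_top10: bool  # were the top-10 retrieved memories judged relevant?


def p95(values: list[float]) -> float:
    """Nearest-rank 95th percentile."""
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # integer ceil(0.95 * n)
    return ordered[rank - 1]


def compute_kpis(logs: list[RequestLog]) -> dict[str, float]:
    n = len(logs)
    return {
        "p95_latency_s": p95([r.latency_s for r in logs]),
        "memory_relevance": sum(r.relevant_top10 for r in logs) / n,
        "cache_hit_rate": sum(r.served_from != "vector_db" for r in logs) / n,
    }


# Targets from the production-ready definition: P95 under 4 s, relevance over 90%,
# and the majority of requests served from caches.
TARGETS = {"p95_latency_s": ("<", 4.0), "memory_relevance": (">", 0.90), "cache_hit_rate": (">", 0.5)}


def check_targets(kpis: dict[str, float]) -> dict[str, bool]:
    return {
        name: (kpis[name] < limit) if op == "<" else (kpis[name] > limit)
        for name, (op, limit) in TARGETS.items()
    }


logs = [RequestLog(1.0, "semantic_cache", True)] * 9 + [RequestLog(3.5, "vector_db", False)]
kpis = compute_kpis(logs)
print(kpis, check_targets(kpis))
```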

6. Future Directions

Multimodal Memories: Store and retrieve images, code screenshots, and diagrams alongside text memories.

Collaborative Memory Networks: Team-shared knowledge graphs where multiple users contribute to collective learning.

Contextual Forgetting: Intelligent memory decay that automatically prunes outdated information while preserving valuable insights.

References & Sources

- A-MEM: Agentic Memory for LLM Agents. https://arxiv.org/abs/2502.12110

- From Human Memory to AI Memory: A Survey on Memory Mechanisms in the Era of LLMs. https://arxiv.org/abs/2504.15965

- Optimizing AI Agent Performance: Advanced Techniques and Tools for Open-Source Agentic Frameworks in 2025. https://superagi.com/optimizing-ai-agent-performance-advanced-techniques-and-tools-for-open-source-agentic-frameworks-in-2025/

- How Does Semantic Caching Enhance LLM Performance? – GigaSpaces. https://www.gigaspaces.com/blog/semantic-caching-enhance-llm-performance

- Towards Universal & Efficient Model Compression via Exponential Torque Pruning. https://arxiv.org/abs/2506.22015

- Aligning LLM Agents by Learning Latent Preference – NeurIPS 2024. https://proceedings.neurips.cc/paper_files/paper/2024/hash/f75744612447126da06767daecce1a84-Abstract-Conference.html

- Key Access Patterns | VectorHub by Superlinked. https://superlinked.com/vectorhub/building-blocks/vector-search/access-patterns

- Understanding RAG Part VII: Vector Databases & Indexing – MachineLearningMastery. https://machinelearningmastery.com/understanding-rag-part-vii-vector-databases-indexing-strategies/

- A Practical Deep Dive Into Memory for Agentic Systems – DailyDoseofDS. https://www.dailydoseofds.com/ai-agents-crash-course-part-8-with-implementation/
