I attended AI Engineer Paris 2025. Here are my key takeaways, plus the new tools and concepts I learned about. In general, I was pleasantly surprised by the talent density at this event!

This blog reflects my personal views only; they do not represent the views of any employer.

What’s hot right now:

“Generated using 🍌 Nano Banana by Google”

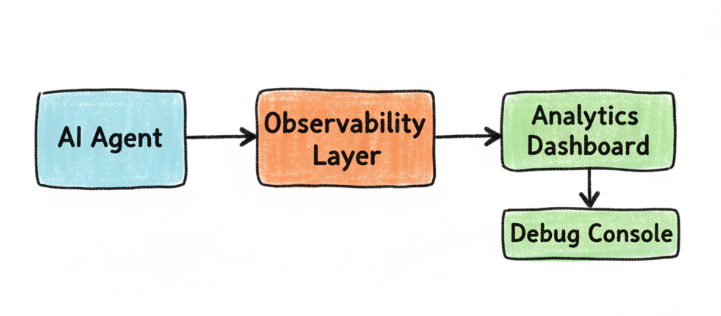

Observability all day, every day

The Agentic world is maturing. Multiple companies are building Observability features into their existing products. It feels like table stakes, but it’s still early. Take Sentry, for example: if you are already a customer, it’s only natural that you will use their new product for debugging and observing your AI Agents. I predict that almost every player in the Product Analytics and Observability space will ship a product for Agents in the next few months (if they haven’t done so already). For now, I don’t see much innovation beyond that.

From one point of view, Agent Observability feels like a commodity. In reality, it’s very similar to web app analytics: it’s difficult to innovate when you are providing graphs, error codes, execution time stats, etc. They are super important and much needed, of course, but so are the analytics in a simple web app. Someone will build the “Mixpanel for Agent Observability” in the coming months: an Agent Native Analytics Platform.

Another problem that arises is the fragmentation in the Observability space for your Agents. Imagine your team using 5+ products: it will be a mess. Someone will build the “Segment” for Agent Observability, where you send your data to one provider that guarantees capture and then forwards it to whichever end product you want. [inner_monologue_starts] Maybe I should build that product!? [inner_monologue_ends]
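To make the “Segment for Agent Observability” idea concrete, here is a minimal sketch of the capture-once, forward-everywhere pattern. Everything in it is hypothetical: the `TelemetryRouter` class, the event fields, and the two in-memory destinations are illustration only and do not correspond to any vendor’s SDK.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

Event = Dict[str, Any]
Sink = Callable[[Event], None]


@dataclass
class TelemetryRouter:
    """Hypothetical router: capture each agent event once, then fan it out."""
    sinks: Dict[str, Sink] = field(default_factory=dict)
    captured: List[Event] = field(default_factory=list)
    failures: List[tuple] = field(default_factory=list)

    def register(self, name: str, sink: Sink) -> None:
        self.sinks[name] = sink

    def track(self, event: Event) -> None:
        # Capture first, so a failing destination never loses the event.
        self.captured.append(event)
        for name, sink in self.sinks.items():
            try:
                sink(dict(event))  # pass a copy so sinks cannot affect each other
            except Exception as exc:
                # Record the failure and keep forwarding to the other sinks.
                self.failures.append((name, event, exc))


# Two stand-in destinations (plain lists instead of real products).
analytics_events: List[Event] = []
error_events: List[Event] = []


def errors_only(event: Event) -> None:
    if event.get("status") == "error":
        error_events.append(event)


router = TelemetryRouter()
router.register("analytics", analytics_events.append)
router.register("errors", errors_only)

router.track({"agent": "support-bot", "step": "tool_call", "status": "ok", "latency_ms": 120})
router.track({"agent": "support-bot", "step": "tool_call", "status": "error", "latency_ms": 900})
```

The design choice that matters is the order inside `track`: the event is stored before any destination is called, so a destination outage only shows up in `failures` and never costs you data.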

“DIY Cloud”

The castle of the big Cloud providers is showing cracks. Well, not really, but it’s interesting to see the following: multiple companies are building specialized compute services on their own bare metal and providing serverless, Lambda-style infrastructure. The catalyst for that move seems to be the microVM project Firecracker. It’s not really a prediction, because this space is already booming, but I expect more companies to pop up in the next few months and start eating small revenue points from the big Cloud providers.

MCP is growing up?

Despite the insane ratio of MCP servers to MCP users (POST), multiple companies are trying to solve different problems: discovering a server’s potential actions, and authorization with 3rd-party services or other MCP servers. On the monetization front, Apify’s product is interesting. They are trying to build the Substack/Shopify of MCP servers by giving monetization tools to creators and an easy way for consumers to pay for consumption. Maybe specialized MCP servers are the next “Notion Template side hustle”?! A “How to make 10K/month w/ a Dropshipping MCP server” YouTube video is coming in the next 2 years.
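For context on what “discovering a server’s potential actions” looks like on the wire: MCP is built on JSON-RPC 2.0, and a client asks a server for its tools with the `tools/list` method. The sketch below only builds that request and parses a reply. The helper names and the sample response (including the `search_products` tool) are made up for illustration, and a real session also needs the initialization handshake and a transport, which are left out here.

```python
import json
from typing import List


def build_tools_list_request(request_id: int) -> str:
    """Serialize a JSON-RPC 2.0 request asking an MCP server for its tools."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "method": "tools/list"})


def tool_names(response_text: str) -> List[str]:
    """Extract the tool names from a tools/list response."""
    response = json.loads(response_text)
    return [tool["name"] for tool in response["result"]["tools"]]


# Made-up response from a hypothetical dropshipping MCP server.
sample_response = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "tools": [
            {
                "name": "search_products",
                "description": "Search the supplier catalog",
                "inputSchema": {
                    "type": "object",
                    "properties": {"query": {"type": "string"}},
                    "required": ["query"],
                },
            }
        ]
    },
})

request = build_tools_list_request(1)
names = tool_names(sample_response)
```

Each tool carries a name, a description, and a JSON Schema for its input, which is the raw material a discovery or marketplace product has to work with.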

What’s hot in the coming months:

- Tooling for Agents: Observability, unit testing, compliance testing, maintainability

- Tooling for MCP servers: deploying, monetization, authorization

- Purpose-built agents for existing products (e.g., Datadog agent for taking action on an error threshold).

After the disappointment that was GPT-5, people seem to have gone back to focusing on moving the needle forward in the fundamental building blocks of Agents and MCP servers.

Companies & Tools that got me interested

- Docker Hub MCP Server: A New Way to Discover, Inspect, and Manage Container Images

- MicroVM: the open-source virtual machine behind AWS Lambda, a lightweight virtual machine that sits between containers and full VMs.

- roocode: Open Source coding agent

- cagent: Build AI Agents, Dockerfile style

- daytona.io: Secure and Elastic Infrastructure for Running Your AI-Generated Code.

- LightLLM: LLM inference and serving framework

Concepts I learned

“Generated using 🍌 Nano Banana by Google”

- Thinking traces: The reasoning steps of an LLM. They are exposed in OpenAI’s new Responses API.

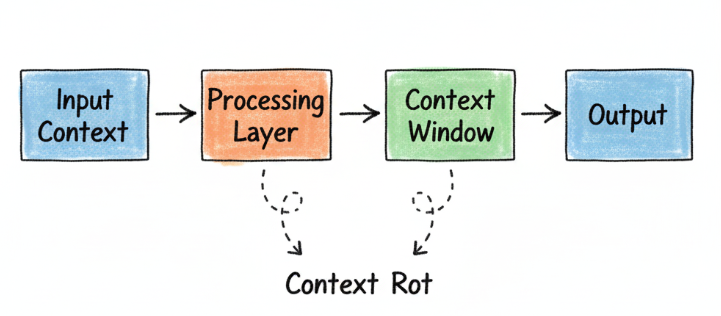

- Context rot: The performance drop in LLMs with longer context inputs.

- Needle in a haystack: hide a small fact (the “needle”) inside a very long context (the “haystack”). Then ask the model to retrieve that fact. It benchmarks how effectively models use their context windows.

- Speech diarization: It’s not TTS (text-to-speech); it’s splitting audio into segments by speaker.
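The needle-in-a-haystack test from the list above is simple enough to sketch in a few lines. This is a toy harness under loud assumptions: `toy_model` is a stand-in that only “sees” the last 2,000 characters of its context (a crude imitation of a model losing track of earlier text), and the filler sentence, the needle, and all function names are invented for illustration. To benchmark a real model, you would swap `toy_model` for a function that calls it.

```python
import re
from typing import Callable, Dict, List, Tuple

FILLER = "The sky was grey and the train was late again."
NEEDLE = "The magic number is 7481."


def build_haystack(needle: str, n_sentences: int, depth: float) -> str:
    """Place the needle at a relative depth (0.0 = start, 1.0 = end) in filler text."""
    sentences = [FILLER] * n_sentences
    sentences.insert(int(depth * n_sentences), needle)
    return " ".join(sentences)


def toy_model(context: str, question: str) -> str:
    """Stand-in for an LLM: it can only read the last 2,000 characters."""
    visible = context[-2000:]
    match = re.search(r"magic number is (\d+)", visible)
    return match.group(1) if match else "I don't know"


def run_needle_test(
    ask_model: Callable[[str, str], str],
    expected: str,
    lengths: List[int],
    depths: List[float],
) -> Dict[Tuple[int, float], bool]:
    """Record, for every (haystack length, needle depth) pair, whether the fact was retrieved."""
    results = {}
    for n in lengths:
        for depth in depths:
            context = build_haystack(NEEDLE, n, depth)
            answer = ask_model(context, "What is the magic number?")
            results[(n, depth)] = expected in answer
    return results


results = run_needle_test(toy_model, "7481", lengths=[10, 200], depths=[0.0, 0.5, 1.0])
for (n, depth), found in sorted(results.items()):
    print(f"sentences={n:4d} depth={depth:.1f} found={found}")
```

With the toy model, the short haystack passes at every depth, while the long one only passes when the needle sits at the very end. A grid of pass/fail results over length and depth like this is what such a benchmark reports, and it is one way to visualize context rot.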

Thanks for reading!